Talk by L. Chong: Zero-Shot Semantic Segmentation for Robots in Agriculture or “Weeds Are Weird”

Y. L. Chong, L. Nunes, F. Magistri, X. Zhong, J. Behley, and C. Stachniss, “Zero-Shot Semantic Segmentation for Robots in Agriculture,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2025. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chong2025iros.pdf Code: https://github.com/PRBonn/WeedsAreWeird

Talk by N. Trekel: Benchmark for Evaluating Long-Term Localization in Indoor Environments (IROS’25)

N. Trekel, T. Guadagnino, T. Läbe, L. Wiesmann, P. Aguiar, J. Behley, and C. Stachniss, “Benchmark for Evaluating Long-Term Localization in Indoor Environments Under Substantial Static and Dynamic Scene Changes,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2025. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/trekel2025iros.pdf Data: https://www.ipb.uni-bonn.de/html/projects/localization_benchmark/ CodaBench Challenge: https://www.codabench.org/competitions/8889/

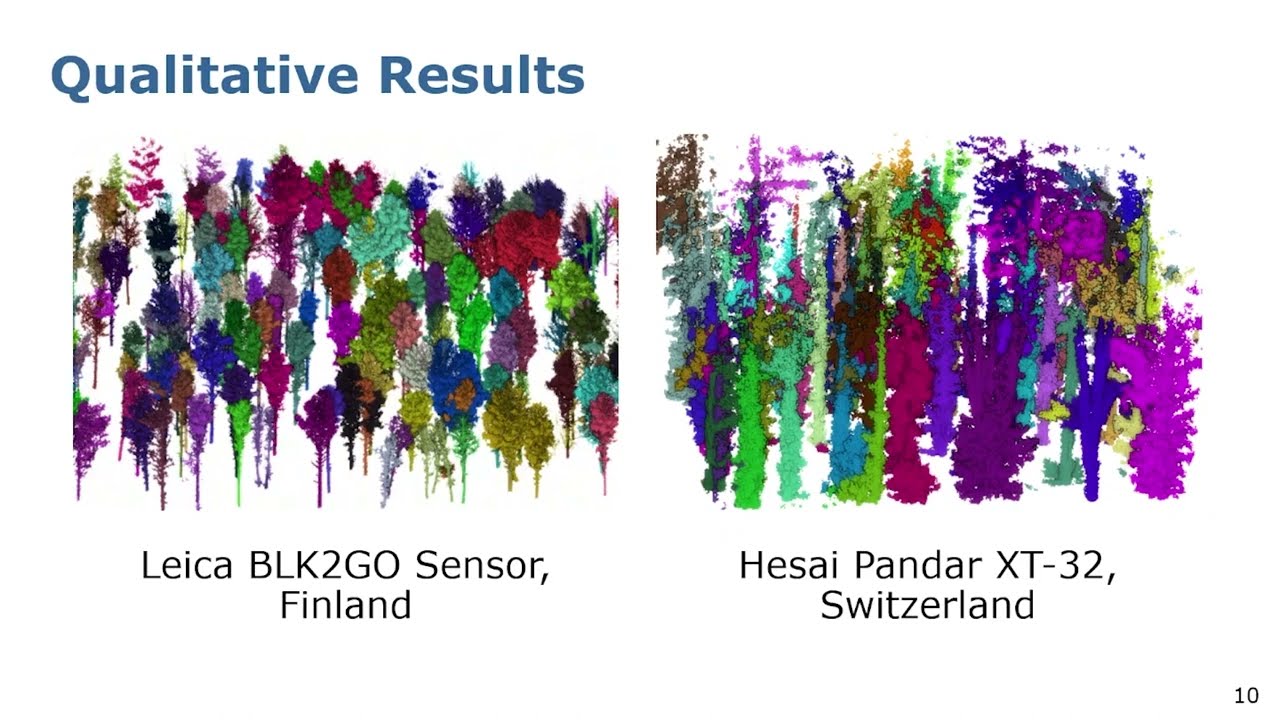

Talk by M. Sodano: 3D Hierarchical Panoptic Segmentation in Real Orchard Environments … (IROS’25)

M. Sodano, F. Magistri, E. A. Marks, F. A. Hosn, A. Zurbayev, R. Marcuzzi, M. V. R. Malladi, J. Behley, and C. Stachniss, “3D Hierarchical Panoptic Segmentation in Real Orchard Environments Across Different Sensors,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2025. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sodano2025iros.pdf Code: https://github.com/PRBonn/hapt3D Data: https://www.ipb.uni-bonn.de/data/hops/

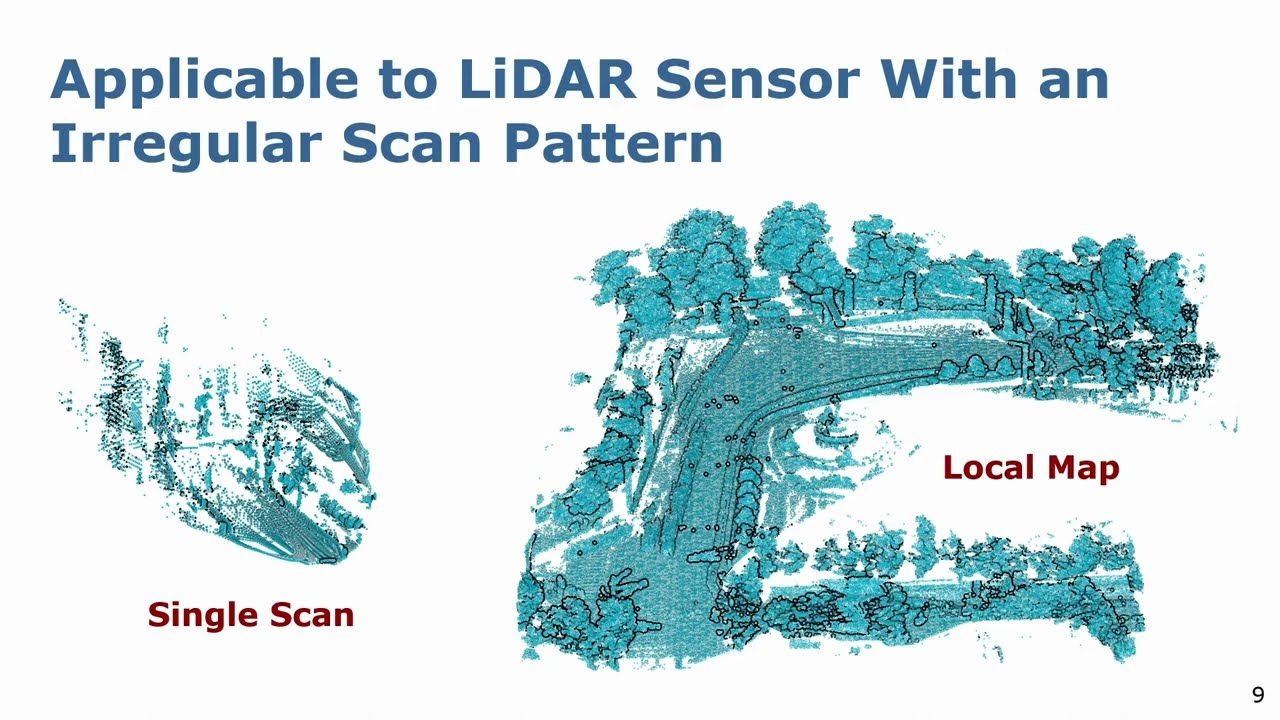

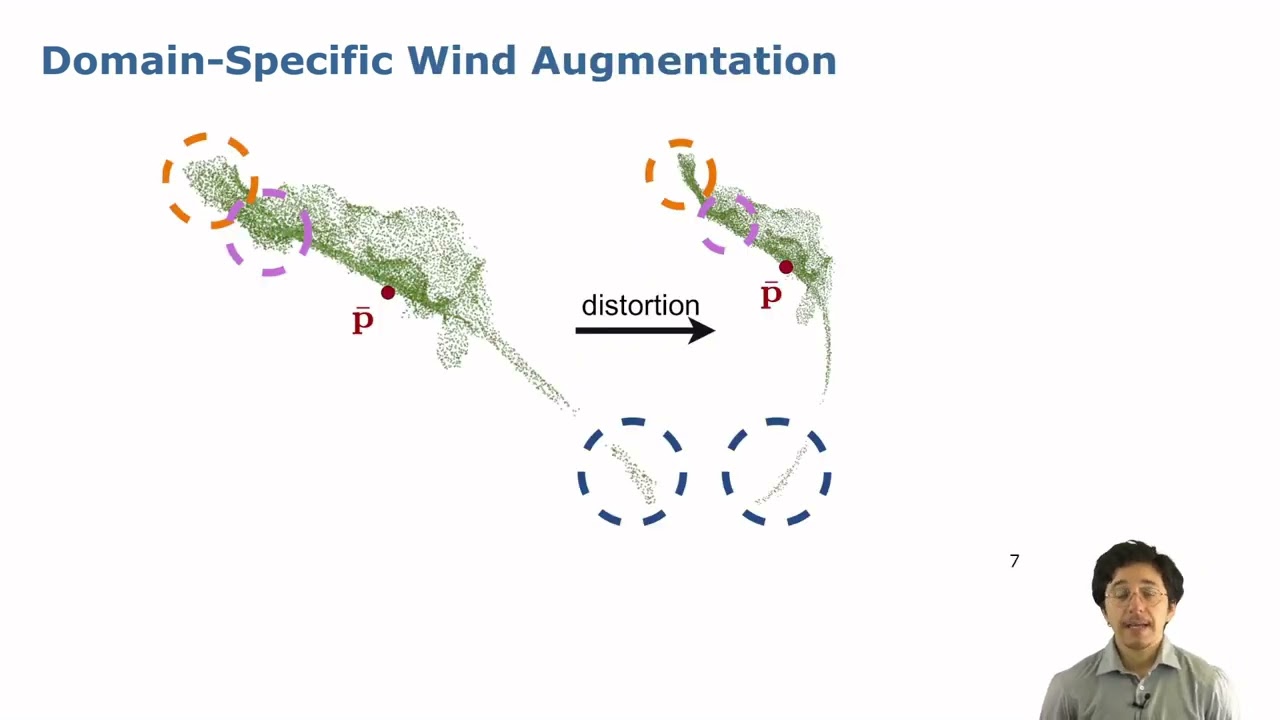

PhenoRob PhD Graduate Talks: Benedikt Mersch

Benedikt Mersch gives a talk on “Spatio-Temporal Perception for Mobile Robots in Dynamic Environments” after successfully completing his PhD as part of PhenoRob. In the PhenoRob PhD Graduate Talks, recent PhenoRob graduates present their research within the Cluster of Excellence.

Talk by D. Casado Herraez: SPR: Single-Scan Radar Place Recognition (RAL’24/ICRA’25)

Talk about the paper: D. Casado Herraez, L. Chang, M. Zeller, L. Wiesmann, J. Behley, M. Heidingsfeld, and C. Stachniss, “SPR: Single-Scan Radar Place Recognition,” IEEE Robotics and Automation Letters (RA-L), vol. 9, iss. 10, pp. 9079-9086, 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/casado-herraez2024ral.pdf

Talk by Y. Pan: PINGS: Gaussian Splatting Meets Distance Fields (RSS2025)

RSS 2025 Talk by Yue Pan for the paper Y. Pan, X. Zhong, L. Jin, L. Wiesmann, M. Popović, J. Behley, and C. Stachniss, “PINGS: Gaussian Splatting Meets Distance Fields within a Point-Based Implicit Neural Map,” in Proc. of Robotics: Science and Systems (RSS), 2025. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/pan2025rss.pdf Code: https://github.com/PRBonn/PINGS

Talk by C. Stachniss on How to Write Good Papers (IROS2024 Young Professionals Invited Talk)

Invited Talk by Cyrill Stachniss at IROS 2024 for the Young Professionals. Stephany Berrio Perez recorded, edited, and provided the video.

Talk by L. Lobefaro: Spatio-Temporal Consistent Mapping of Growing Plants for Agricultural Robots…

IROS 2024 Talk by Luca Lobefaro about the paper: L. Lobefaro, M. V. R. Malladi, T. Guadagnino, and C. Stachniss, “Spatio-Temporal Consistent Mapping of Growing Plants for Agricultural Robots in the Wild,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/lobefaro2024iros.pdf CODE: https://github.com/PRBonn/spatio-temporal-mapping

Talk by L. Nunes: Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion (CVPR’24)

CVPR 2024 Talk by Lucas Nunes about the paper: L. Nunes, R. Marcuzzi, B. Mersch, J. Behley, and C. Stachniss, “Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/nunes2024cvpr.pdf CODE: https://github.com/PRBonn/LiDiff

Talk by X. Zhong: 3D LiDAR Mapping in Dynamic Environments using a 4D Implicit Neural Rep. (CVPR’24)

CVPR 2024 Talk by X. Zhong about the paper: X. Zhong, Y. Pan, C. Stachniss, and J. Behley, “3D LiDAR Mapping in Dynamic Environments using a 4D Implicit Neural Representation,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zhong2024cvpr.pdf CODE: https://github.com/PRBonn/4dNDF

Talk by M. Sodano: Open-World Semantic Segmentation Including Class Similarity (CVPR’24)

CVPR 2024 Talk by Matteo Sodano about the paper: M. Sodano, F. Magistri, L. Nunes, J. Behley, and C. Stachniss, “Open-World Semantic Segmentation Including Class Similarity,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sodano2024cvpr.pdf CODE: https://github.com/PRBonn/ContMAV

Talk by E. Marks: High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real…

Talk about the paper: E. Marks, M. Sodano, F. Magistri, L. Wiesmann, D. Desai, R. Marcuzzi, J. Behley, and C. Stachniss, “High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real Field Conditions,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, pp. 4791-4798, 2023. doi:10.1109/LRA.2023.3288383 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marks2023ral.pdf CODE: https://github.com/PRBonn/plant_pcd_segmenter VIDEO: https://youtu.be/dvA1SvQ4iEY TALK: https://youtu.be/_k3vpYl-UW0

Talk by D. Casado Herraez: Radar-Only Odometry and Mapping for Autonomous Vehicles (ICRA’2024)

Talk at ICRA’2024 about the paper: D. Casado Herraez, M. Zeller, L. Chang, I. Vizzo, M. Heidingsfeld, and C. Stachniss, “Radar-Only Odometry and Mapping for Autonomous Vehicles,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/casado-herraez2024icra.pdf

Talk by S. Gupta: Effectively Detecting Loop Closures using Point Cloud Density Maps (ICRA’2024)

Talk at ICRA’2024 about the paper: S. Gupta, T. Guadagnino, B. Mersch, I. Vizzo, and C. Stachniss, “Effectively Detecting Loop Closures using Point Cloud Density Maps,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/gupta2024icra.pdf CODE: https://github.com/PRBonn/MapClosures

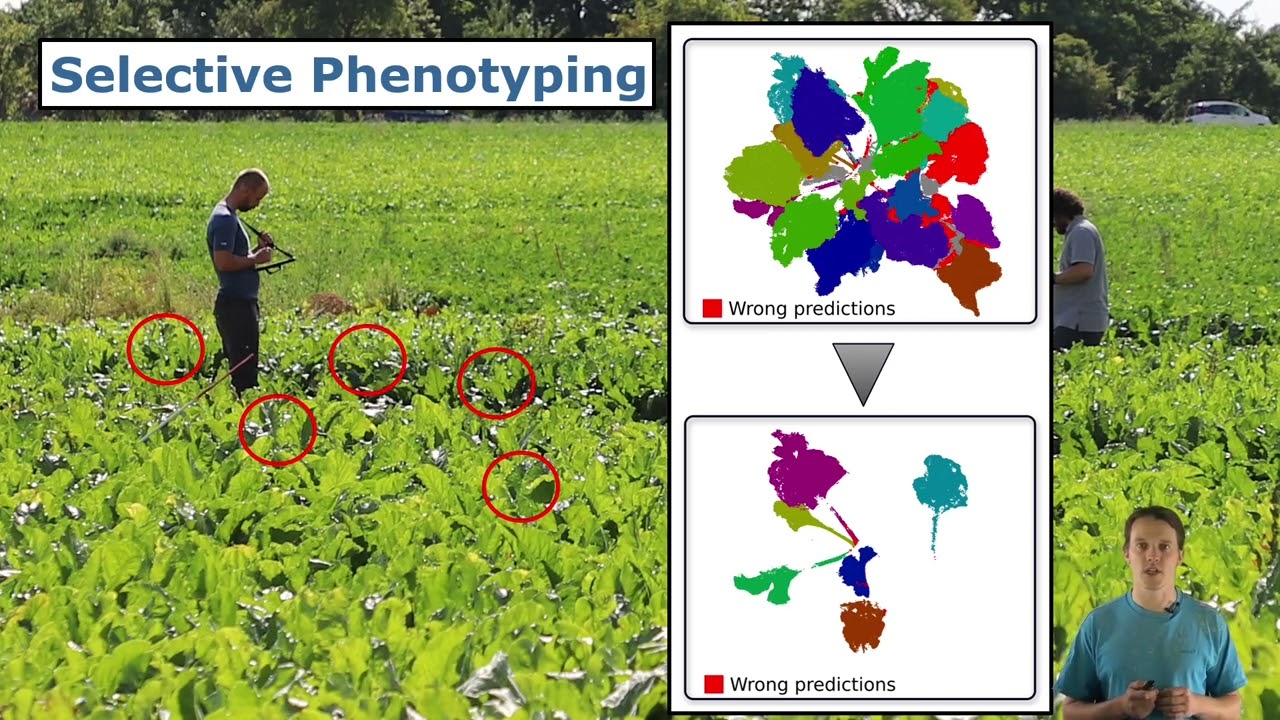

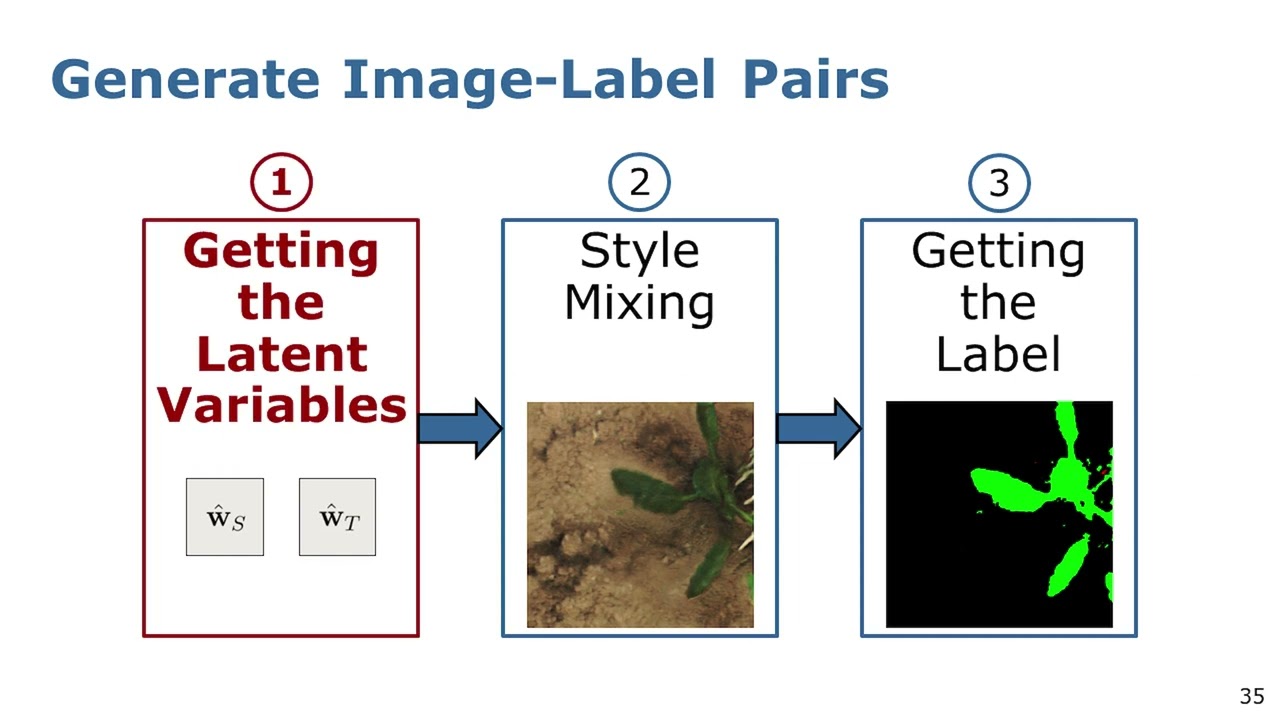

Talk by L. Chong: Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation…

Talk about the paper: Y. L. Chong, J. Weyler, P. Lottes, J. Behley, and C. Stachniss, “Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation in New Fields and on Different Robotic Platforms,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, p. 5259–5266, 2023. doi:10.1109/LRA.2023.3293356 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chong2023ral.pdf CODE: https://github.com/PRBonn/StyleGenForLabels

Trailer: LocNDF: Neural Distance Field Mapping for Robot Localization (RAL’24)

Paper trailer for the work: L. Wiesmann, T. Guadagnino, I. Vizzo, N. Zimmerman, Y. Pan, H. Kuang, J. Behley, and C. Stachniss, “LocNDF: Neural Distance Field Mapping for Robot Localization,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, p. 4999–5006, 2023. doi:10.1109/LRA.2023.3291274 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2023ral-icra.pdf CODE: https://github.com/PRBonn/LocNDF

Talk by L. Wiesmann: LocNDF: Neural Distance Field Mapping for Robot Localization (RAL-ICRA’24)

Talk about the paper: L. Wiesmann, T. Guadagnino, I. Vizzo, N. Zimmerman, Y. Pan, H. Kuang, J. Behley, and C. Stachniss, “LocNDF: Neural Distance Field Mapping for Robot Localization,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, p. 4999–5006, 2023. doi:10.1109/LRA.2023.3291274 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2023ral-icra.pdf CODE: https://github.com/PRBonn/LocNDF

Talk by G. Roggiolani: Unsupervised Pre-Training for 3D Leaf Instance Segmentation (RAL-ICRA’24)

Talk about the paper: G. Roggiolani, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Unsupervised Pre-Training for 3D Leaf Instance Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 8, pp. 7448-7455, 2023. doi:10.1109/LRA.2023.3320018 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/roggiolani2023ral.pdf CODE: https://github.com/PRBonn/Unsupervised-Pre-Training-for-3D-Leaf-Instance-Segmentation

Talk by M. Malladi: Tree Instance Segmentation and Traits Estimation for Forestry Environments…

ICRA’2024 Talk by Meher Malladi about the paper: M. V. R. Malladi, T. Guadagnino, L. Lobefaro, M. Mattamala, H. Griess, J. Schweier, N. Chebrolu, M. Fallon, J. Behley, and C. Stachniss, “Tree Instance Segmentation and Traits Estimation for Forestry Environments Exploiting LiDAR Data,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/malladi2024icra.pdf

Talk by F. Magistri: Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction

ICRA’24 Talk by F. Magistri about the paper: F. Magistri, R. Marcuzzi, E. A. Marks, M. Sodano, J. Behley, and C. Stachniss, “Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction of Fruits for Agricultural Robots,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. Paper Trailer: https://youtu.be/U1xxnUGrVL4 ICRA’24 Talk: https://youtu.be/JKJMEC6zfHE PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2024icra.pdf Code (to be released soon): https://github.com/PRBonn/TCoRe

Talk by R. Marcuzzi: Mask4D: End-to-End Mask-Based 4D Panoptic Segmentation for LiDAR Data (ICRA’24)

ICRA’24 Talk for the paper: R. Marcuzzi, L. Nunes, L. Wiesmann, E. Marks, J. Behley, and C. Stachniss, “Mask4D: End-to-End Mask-Based 4D Panoptic Segmentation for LiDAR Sequences,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 11, pp. 7487-7494, 2023. doi:10.1109/LRA.2023.3320020 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marcuzzi2023ral-meem.pdf CODE: https://github.com/PRBonn/Mask4D

Talk by B. Mersch: Building Volumetric Beliefs for Dynamic Environments Exploiting MOS (RAL’23)

Talk by Benedikt Mersch about the paper: B. Mersch, T. Guadagnino, X. Chen, I. Vizzo, J. Behley, and C. Stachniss, “Building Volumetric Beliefs for Dynamic Environments Exploiting Map-Based Moving Object Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, p. 5180–5187, 2023. doi:10.1109/LRA.2023.3292583 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2023ral.pdf

Talk by M. Zeller: Radar Tracker: Moving Instance Tracking in Sparse and Noisy Radar Data (ICRA’24)

Talk by Matthias Zeller on M. Zeller, D. Casado Herraez, J. Behley, M. Heidingsfeld, and C. Stachniss, “Radar Tracker: Moving Instance Tracking in Sparse and Noisy Radar Point Clouds,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zeller2024icra.pdf

Talk by M. Zeller: Radar Instance Transformer: Reliable Moving Instance Segmentation 4 Radar (T-RO)

Talk by Matthias Zeller on the paper: M. Zeller, V. S. Sandhu, B. Mersch, J. Behley, M. Heidingsfeld, and C. Stachniss, “Radar Instance Transformer: Reliable Moving Instance Segmentation in Sparse Radar Point Clouds,” IEEE Transactions on Robotics (TRO), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zeller2024tro.pdf

Talk by R. Marcuzzi: Mask-Based Panoptic LiDAR Segmentation for Autonomous Driving (RAL’23)

IROS’23 Talk for the paper: R. Marcuzzi, L. Nunes, L. Wiesmann, J. Behley, and C. Stachniss, “Mask-Based Panoptic LiDAR Segmentation for Autonomous Driving,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 2, p. 1141–1148, 2023. doi:10.1109/LRA.2023.3236568 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marcuzzi2023ral.pdf

Talk by Y. Pan: Panoptic Mapping with Fruit Completion and Pose Estimation … (IROS’23)

IROS’23 Talk for the paper: Y. Pan, F. Magistri, T. Läbe, E. Marks, C. Smitt, C. S. McCool, J. Behley, and C. Stachniss, “Panoptic Mapping with Fruit Completion and Pose Estimation for Horticultural Robots,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/pan2023iros.pdf CODE: https://github.com/PRBonn/HortiMapping

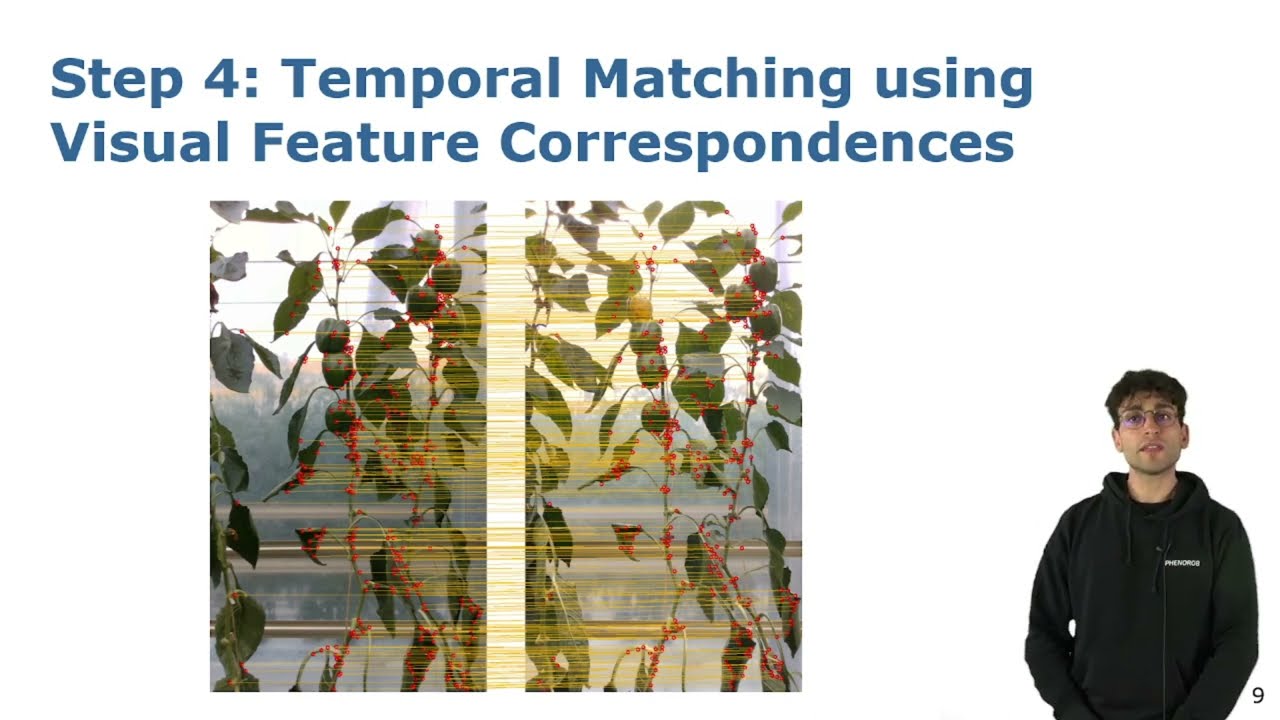

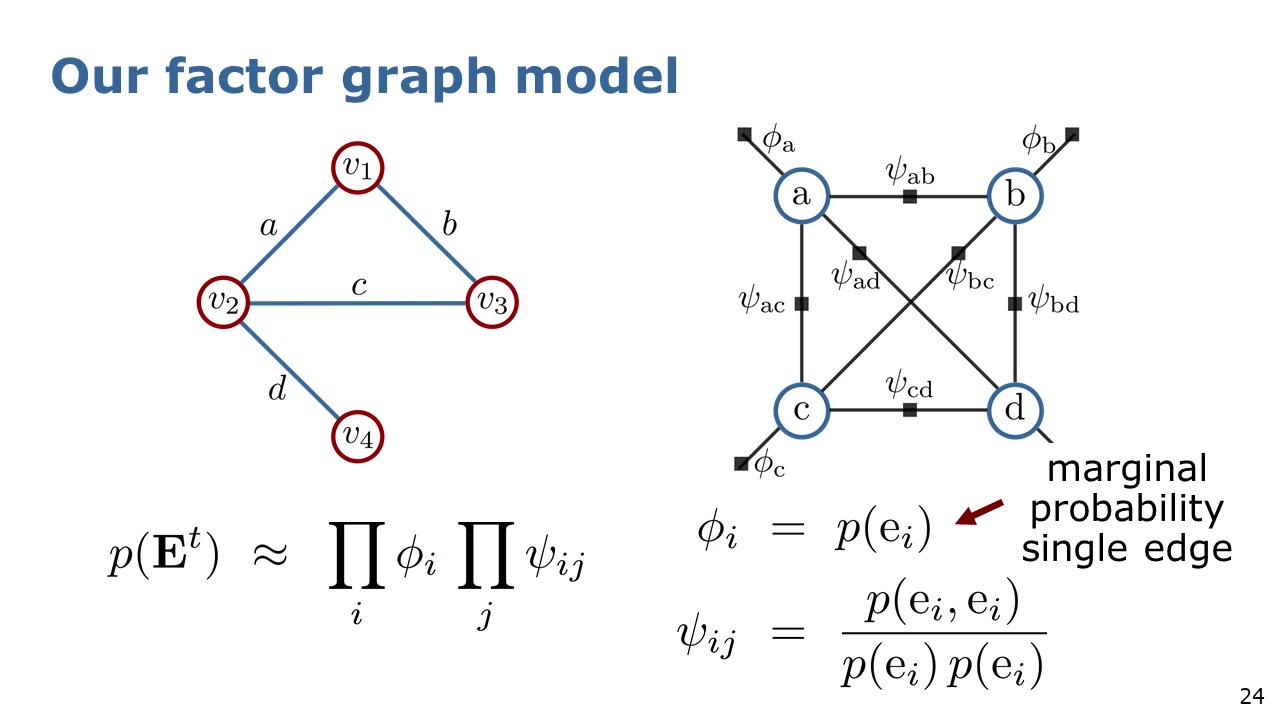

Talk by L. Lobefaro: Estimating 4D Data Associations Towards Spatial-Temporal Mapping … (IROS’23)

IROS’23 Talk for the paper: L. Lobefaro, M. V. R. Malladi, O. Vysotska, T. Guadagnino, and C. Stachniss, “Estimating 4D Data Associations Towards Spatial-Temporal Mapping of Growing Plants for Agricultural Robots,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/lobefaro2023iros.pdf CODE: https://github.com/PRBonn/plants_temporal_matcher

Talk by I. Vizzo: KISS-ICP: In Defense of Point-to-Point ICP (RAL-IROS’23)

RAL-IROS’23 Talk for the paper: I. Vizzo, T. Guadagnino, B. Mersch, L. Wiesmann, J. Behley, and C. Stachniss, “KISS-ICP: In Defense of Point-to-Point ICP – Simple, Accurate, and Robust Registration If Done the Right Way,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 2, pp. 1-8, 2023. doi:10.1109/LRA.2023.3236571 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2023ral.pdf CODE: https://github.com/PRBonn/kiss-icp

Talk by M. Zeller: Gaussian Radar Transformer for Semantic Segmentation in Noisy Radar Data (RAL)

RAL-IROS’23 Talk about the paper: M. Zeller, J. Behley, M. Heidingsfeld, and C. Stachniss, “Gaussian Radar Transformer for Semantic Segmentation in Noisy Radar Data,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 1, p. 344–351, 2023. doi:10.1109/LRA.2022.3226030 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zeller2023ral.pdf

Talk by Sodano & Zimmerman: Constructing Metric-Semantic Maps using Floor Plan Priors… (IROS’23)

IROS’23 Talk for the paper: N. Zimmerman, M. Sodano, E. Marks, J. Behley, and C. Stachniss, “Constructing Metric-Semantic Maps using Floor Plan Priors for Long-Term Indoor Localization,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zimmerman2023iros.pdf CODE: https://github.com/PRBonn/SIMP

Talk by L. Nunes: Temporal Consistent 3D Representation Learning for Semantic Perception.. (CVPR’23)

CVPR’23 Talk about the paper: L. Nunes, L. Wiesmann, R. Marcuzzi, X. Chen, J. Behley, and C. Stachniss, “Temporal Consistent 3D LiDAR Representation Learning for Semantic Perception in Autonomous Driving,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/nunes2023cvpr.pdf CODE: https://github.com/PRBonn/TARL

Talk by X. Zhong: SHINE-Mapping: 3D Mapping Using Sparse Hierarchical Implicit Neural Rep. (ICRA’23)

ICRA’23 Talk about the paper: X. Zhong, Y. Pan, J. Behley, and C. Stachniss, “SHINE-Mapping: Large-Scale 3D Mapping Using Sparse Hierarchical Implicit Neural Representations,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zhong2023icra.pdf CODE: https://github.com/PRBonn/SHINE_mapping

Talk by G. Roggiolani & M. Sodano: Hierarchical Approach for Joint Semantic, Plant Inst… (ICRA’23)

ICRA’23 Talk about the paper: G. Roggiolani, M. Sodano, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Hierarchical Approach for Joint Semantic, Plant Instance, and Leaf Instance Segmentation in the Agricultural Domain,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/roggiolani2023icra-hajs.pdf CODE: https://github.com/PRBonn/HAPT

Talk by G. Roggiolani: On Domain-Specific Pre-Training for Effective Semantic Perception…(ICRA’23)

ICRA’23 Talk about the paper: G. Roggiolani, F. Magistri, T. Guadagnino, J. Weyler, G. Grisetti, C. Stachniss, and J. Behley, “On Domain-Specific Pre-Training for Effective Semantic Perception in Agricultural Robotics,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/roggiolani2023icra-odsp.pdf CODE: https://github.com/PRBonn/agri-pretraining

Talk by H. Dong: Learning-Based Dimensionality Reduction for Compact and Effective Features (ICRA’23)

ICRA’23 Talk about the paper: H. Dong, X. Chen, M. Dusmanu, V. Larsson, M. Pollefeys, and C. Stachniss, “Learning-Based Dimensionality Reduction for Computing Compact and Effective Local Feature Descriptors,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/dong2023icra.pdf

Talk by A. Riccardi: Fruit Tracking Over Time Using High-Precision Point Clouds (ICRA’23)

ICRA’23 Talk about the paper: A. Riccardi, S. Kelly, E. Marks, F. Magistri, T. Guadagnino, J. Behley, M. Bennewitz, and C. Stachniss, “Fruit Tracking Over Time Using High-Precision Point Clouds,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/riccardi2023icra.pdf

Talk by L. Wiesmann: KPPR: Exploiting Momentum Contrast for Point Cloud-Based Place Recog..(ICRA’23)

ICRA 2023 talk for the paper: L. Wiesmann, L. Nunes, J. Behley, and C. Stachniss, “KPPR: Exploiting Momentum Contrast for Point Cloud-Based Place Recognition,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 2, pp. 592-599, 2023. doi:10.1109/LRA.2022.3228174 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2023ral.pdf CODE: https://github.com/PRBonn/kppr

Talk by M. Zeller: Radar Velocity Transformer: Single-scan MOS in Noisy Radar Point Clouds (ICRA’23)

ICRA’23 Talk about the paper: M. Zeller, V. S. Sandhu, B. Mersch, J. Behley, M. Heidingsfeld, and C. Stachniss, “Radar Velocity Transformer: Single-scan Moving Object Segmentation in Noisy Radar Point Clouds,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/zeller2023icra.pdf

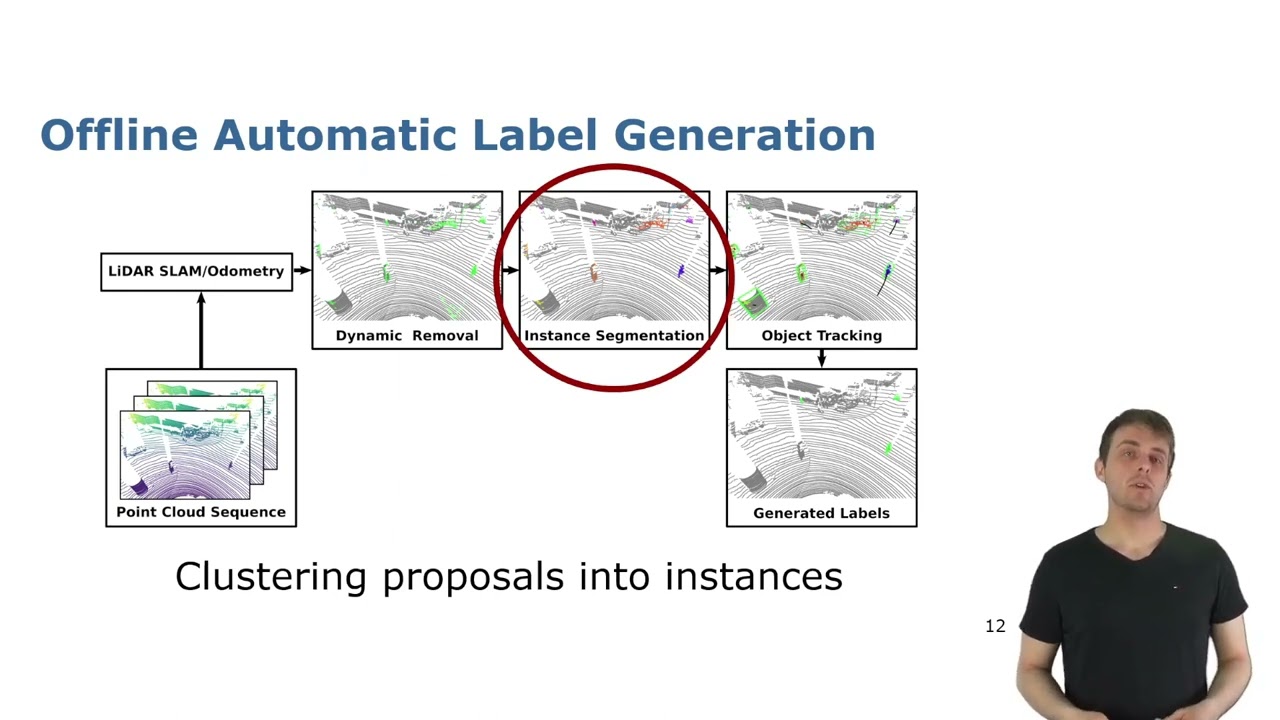

Talk by B. Mersch: Automatic Labeling to Generate Training Data for Online LiDAR MOS (RAL-ICRA’23)

ICRA 2023 Talk for the paper: X. Chen, B. Mersch, L. Nunes, R. Marcuzzi, I. Vizzo, J. Behley, and C. Stachniss, “Automatic Labeling to Generate Training Data for Online LiDAR-Based Moving Object Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 6107-6114, 2022. doi:10.1109/LRA.2022.3166544 PDF: http://arxiv.org/pdf/2201.04501 CODE: https://github.com/PRBonn/auto-mos

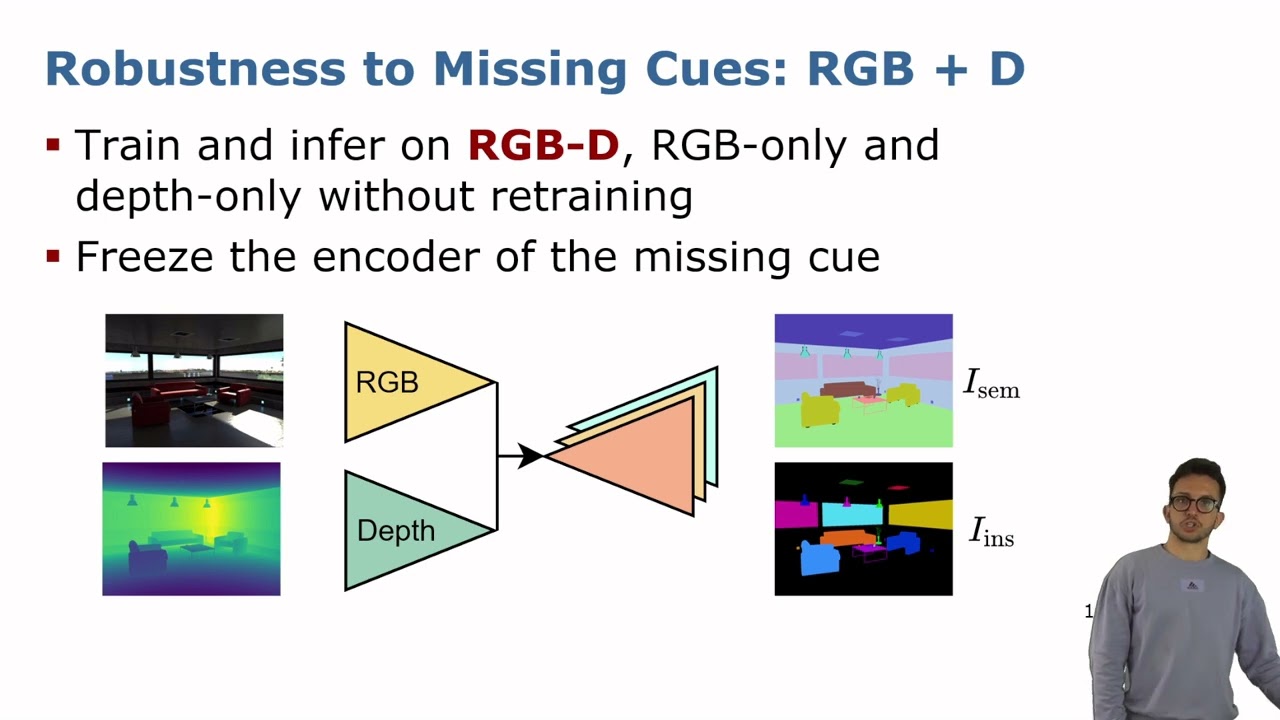

Talk by M. Sodano: Robust Double-Encoder Network for RGB-D Panoptic Segmentation (ICRA’23)

ICRA’23 Talk about the paper: M. Sodano, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Robust Double-Encoder Network for RGB-D Panoptic Segmentation,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sodano2023icra.pdf CODE: https://github.com/PRBonn/PS-res-excite

Talk by S. Kelly: TAIM – Target-Aware Implicit Mapping for Agricultural Crop Inspection (ICRA’23)

ICRA 2023 Talk by Shane Kelly for the paper: S. Kelly, A. Riccardi, E. Marks, F. Magistri, T. Guadagnino, M. Chli, and C. Stachniss, “Target-Aware Implicit Mapping for Agricultural Crop Inspection,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023.

Talk by S. Li: Multi-scale Interaction for Real-time LiDAR Data Segmentation … (RAL-ICRA’23)

ICRA’23 Talk about the paper: S. Li, X. Chen, Y. Liu, D. Dai, C. Stachniss, and J. Gall, “Multi-scale Interaction for Real-time LiDAR Data Segmentation on an Embedded Platform,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 2, pp. 738-745, 2022. doi:10.1109/LRA.2021.3132059 PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/li2022ral.pdf CODE: https://github.com/sj-li/MINet

Talk by F. Magistri: 3D Shape Completion and Reconstruction for Agricultural Robots (RAL-IROS’22)

IROS 2022 Talk by Federico Magistri on F. Magistri, E. Marks, S. Nagulavancha, I. Vizzo, T. Läbe, J. Behley, M. Halstead, C. McCool, and C. Stachniss, “Contrastive 3D Shape Completion and Reconstruction for Agricultural Robots using RGB-D Frames,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 4, pp. 10120-10127, 2022. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2022ral-iros.pdf

Talk by Y. Pan: Voxfield: Non-Projective Signed Distance Fields (IROS’22)

IROS 2022 Talk by Yue Pan about the paper: Y. Pan, Y. Kompis, L. Bartolomei, R. Mascaro, C. Stachniss, and M. Chli, “Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction,” in Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2022. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/pan2022iros.pdf Code: https://github.com/VIS4ROB-lab/voxfield

Talk by Ignacio Vizzo: Make it Dense – Dense Maps from Sparse Point Clouds (RAL-IROS’22)

IROS 2022 Talk by Ignacio Vizzo: “Make it Dense: Self-Supervised Geometric Scan Completion of Sparse 3D LiDAR Scans in Large Outdoor Environments” I. Vizzo, B. Mersch, R. Marcuzzi, L. Wiesmann, J. Behley, and C. Stachniss, “Make it Dense: Self-Supervised Geometric Scan Completion of Sparse 3D LiDAR Scans in Large Outdoor Environments,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 8534-8541, 2022. doi:10.1109/LRA.2022.3187255 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2022ral-iros.pdf Code: https://github.com/PRBonn/make_it_dense

Talk by L. Wiesmann: DCPCR – Deep Compressed Point Cloud Registration in Large Env… (RAL-IROS’22)

IROS’2022 Talk by Louis Wiesmann about the RAL-IROS’2022 paper: L. Wiesmann, T. Guadagnino, I. Vizzo, G. Grisetti, J. Behley, and C. Stachniss, “DCPCR: Deep Compressed Point Cloud Registration in Large-Scale Outdoor Environments,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 6327-6334, 2022. doi:10.1109/LRA.2022.3171068 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2022ral-iros.pdf Code: https://github.com/PRBonn/DCPCR

Talk by L. Nunes: Unsupervised Class-Agnostic Instance Segmentation of 3D LiDAR Data (RAL-IROS’22)

IROS 2022 Talk by L. Nunes about the work: L. Nunes, X. Chen, R. Marcuzzi, A. Osep, L. Leal-Taixé, C. Stachniss, and J. Behley, “Unsupervised Class-Agnostic Instance Segmentation of 3D LiDAR Data for Autonomous Vehicles,” IEEE Robotics and Automation Letters (RA-L), 2022. doi:10.1109/LRA.2022.3187872 Code: https://github.com/PRBonn/3DUIS Paper: https://www.ipb.uni-bonn.de/pdfs/nunes2022ral-iros.pdf

Talk by B. Mersch: Receding Moving Object Segmentation in 3D LiDAR Data … (IROS’22)

IROS 2022 Talk by Benedikt Mersch on Receding Moving Object Segmentation in 3D LiDAR Data Using Sparse 4D Convolutions Paper: B. Mersch, X. Chen, I. Vizzo, L. Nunes, J. Behley, and C. Stachniss, “Receding Moving Object Segmentation in 3D LiDAR Data Using Sparse 4D Convolutions,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, p. 7503–7510, 2022. doi:10.1109/LRA.2022.3183245 Code: https://github.com/PRBonn/4DMOS PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2022ral.pdf

Talk by J. Rückin: Informative Path Planning for Active Learning in Aerial Semantic Map… (IROS’22)

IROS 2022 talk by Julius Rückin about the paper J. Rückin, L. Jin, F. Magistri, C. Stachniss, and M. Popović, “Informative Path Planning for Active Learning in Aerial Semantic Mapping,” in Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2022. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/rueckin2022iros.pdf

Talk by L. Peters: Learning Mixed Strategies in Trajectory Games (RSS’22)

RSS 2022 Talk by Lasse Peters. For details see: https://lasse-peters.net/pub/lifted-games/

Talk: Supervised & Unsupervised Approaches for LiDAR Perception in Urban Environments by C. Stachniss

Talk on “Supervised & Unsupervised Approaches for LiDAR-Based Perception in Urban Environments” given by Cyrill Stachniss as an Invited Talk at the Workshop on 3D-Deep Learning for Automated Driving at the IEEE Intelligent Vehicles Symposium (IV’2022) in Aachen, Germany.

Talks That Don’t Suck – A Guide to Improve Your Talks (Cyrill Stachniss)

Talks That Don’t Suck – A Guide to Improve Your Talks
Lecture given by Cyrill Stachniss, March 2022

Video not shown due to copyright reasons: Walter Lewin: For the Love of Physics © MIT Open Courseware; see https://www.youtube.com/watch?v=sJG-rXBbmCc

Own examples by/with:
Igor Bogoslavskyi: https://www.ipb.uni-bonn.de/people/igor-bogoslavskyi/
Andres Milioto: https://www.ipb.uni-bonn.de/people/andres-milioto/
Olga Vysotska: https://www.ipb.uni-bonn.de/people/olga-vysotska/
Michelle Watt: https://science.unimelb.edu.au/engage/giving-to-science/botany-foundation/impact/professorial-chair

Video courtesy:
Walter Lewin: For the Love of Physics © MIT Open Courseware; https://www.youtube.com/watch?v=sJG-rXBbmCc
Annalena Baerbock at the UN © euronews / United Nations; https://www.youtube.com/watch?v=82DQEnkkWF4

Image courtesy:
success: pexels-sebastian-voortman-411207 – Photo by Sebastian Voortman on Pexels
speaking: one-against-all-g0eab2b386_1920
boredom: pexels-cottonbro-4842498 – Photo by Cottonbro on Pexels
love-why: pexels-kelly-l-4036764 – Photo by Kelly L. on Pexels
passionate: product-school-S3hhrqLrgYM-unsplash – Photo by Product School on Unsplash
levers: lever-g0592f43e8_1920 – CC0 Public Domain, unknown photographer
environment: auditorium-86197_1920 – Photo by User 12019 on Pixabay
dark room: pexels-photoalexandru-10368435 – Photo by photoalexandru on Pexels
lights on: pexels-jonas-kakaroto-2914421 – Photo by Jonas Kakaroto on Pexels
microphone: public-speaking-3926344_1920 – Photo by lograstudio on Pixabay
lavalier: technology-3183126_1920 – Photo by XX on Pixabay
sitting: pexels-matheus-bertelli-3321793 – Photo by Matheus Bertelli on Pexels
time: clocks-257911_1920 – Photo by Jarmoluk on Pixabay
tools: tools-2145770_1920 – Photo by Maria_Domnina on Pixabay
brain-ideas: pexels-shvets-production-7203727 – Photo by Shvets Production on Pexels
props: pulse-3079902_1920 – Photo by Zerpixelt on Pixabay
blackboard: birger-kollmeier-910261_1920 – Photo by WikimediaImages on Pixabay
slides: alex-litvin-MAYsdoYpGuk-unsplash – Photo by Alex Litvin on Unsplash
slides-design: pexels-junior-teixeira-2047905 – Photo by Junior Teixeira on Pexels
language-processor: pexels-andrey-matveev-5553596 – Photo by Andrey Matveev on Pexels
start: pexels-yigithan-bal-5680771 – Photo by Yigithan Bal on Pexels
start-strong: pexels-andrea-piacquadio-3764014 – Photo by Andrea Piacquadio on Pexels
gun: pointing-gun-1632373_1920 – Photo by kerttu on Pixabay
start-with-why: © Simon Sinek / Penguin
icp: Image by Gerd Altmann on Pixabay
group: one-against-all-g0eab2b386_1920 – Photo by Alexas_Fotos on Pixabay
I have a dream: © picture-alliance/dpa
resonate: © Nancy Duarte/Duarte Inc.
mlk speech analysis: © Nancy Duarte/Duarte Inc.
emotions: pexels-andrea-piacquadio-3812743 – Photo by Andrea Piacquadio on Pexels
TED: © TED.com
story: pexels-tomáš-malík-1703314 – Photo by Tomáš Malík on Pexels
newman: © Paul Newman
made-to-stick: Chip and Dan Heath
the end: pexels-ann-h-11022640 – Photo by Ann H. on Pexels
thankful: pexels-kelly-l-2928146 – Photo by Kelly L. on Pexels
love: pexels-karolina-grabowska-5713581 – Photo by Karolina Grabowska on Pexels
friends-kids: caroline-hernandez-tJHU4mGSLz4-unsplash – Photo by Caroline Hernandez on Unsplash
hand: hand-4661763_1920 – Photo by JacksonDavid on Pixabay
look-to-audience: pexels-fauxels-3184317 – Photo by Fauxels on Pexels
discuss: one-against-all-1744086_1920 – Photo by Alexas_Fotos on Pixabay
speed: joshua-hoehne-TsdelDFTP6Y-unsplash – Photo by Joshua Hoehne on Unsplash
waterbottle: water-bottle-2821977_1920 – Photo by Derneuemann on Pixabay
caesar: public domain (edition of Julius Caesar’s commentaries on the Gallic War and the Civil War, published in Venice in 1783 by Thomas Bentley)
videotape: pexels-anete-lusina-4793181 – Photo by Anete Lusina on Pexels
testtalk: pexels-mikael-blomkvist-6476779 – Photo by Mikael Blomkvist on Pexels
timing: stop-watch-396862_1920 – Photo by Sadia on Pixabay
repeat: pexels-mithul-varshan-3023211 – Photo by Mithul Varshan on Pexels
interview: william-moreland-GkWP64truqg-unsplash – Photo by William Moreland on Unsplash
TED: © TED.com
happy-place: by U3077844 on wikiversity.org / Andy Dean Photography

Recording and editing: Lentfer Filmproduktion

#UniBonn #StachnissLab #robotics #talks

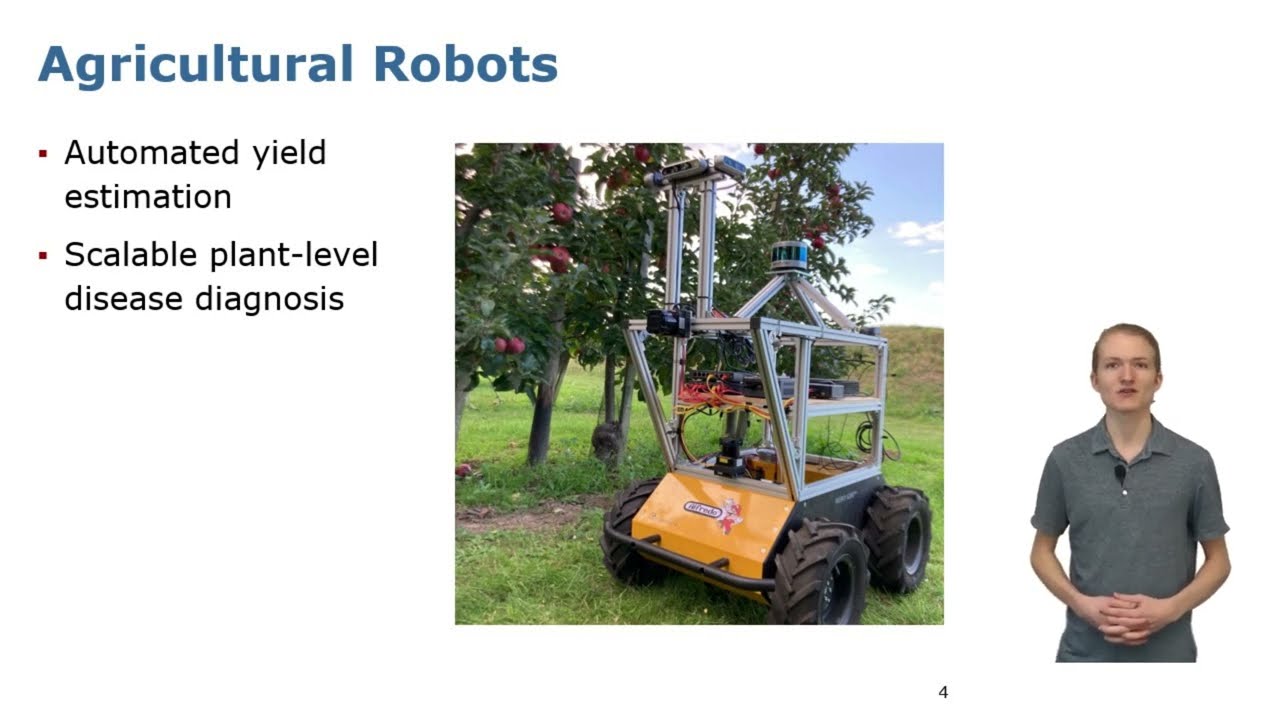

[IPPS2022 Keynote] Cyrill Stachniss – Robotics & Mobile Sensing Towards Sustainable Crop Production

Crop farming plays an essential role in our society, providing us with food, feed, fiber, and fuel. We rely heavily on agricultural production, but at the same time we need to reduce its footprint: less input of chemicals such as herbicides and fertilizer, and of other limited resources. Agricultural robots and other new technologies offer promising directions to address key management challenges in agricultural fields. To achieve this, autonomous field robots need the ability to perceive and model their environment, to predict possible future developments, and to make appropriate decisions in complex and changing situations. This talk will showcase recent developments towards robot-driven sustainable crop production. I will illustrate how tasks can be automated using UAVs and UGVs, as well as the new possibilities this technology can offer.

Cyrill Stachniss is a full professor at the University of Bonn and heads the Photogrammetry and Robotics Lab. He is additionally a Visiting Professor in Engineering at the University of Oxford. Before his appointment in Bonn, he was with the University of Freiburg and the Swiss Federal Institute of Technology. He has been a Microsoft Research Faculty Fellow since 2010 and received the IEEE RAS Early Career Award in 2013. From 2015 to 2019, he was senior editor for the IEEE Robotics and Automation Letters. Together with his colleague Heiner Kuhlmann, he is a spokesperson of the DFG Cluster of Excellence “PhenoRob” at the University of Bonn. In his research, he focuses on probabilistic techniques for mobile robotics, perception, and navigation. The main application areas of his research are autonomous service robots, agricultural robotics, and self-driving cars. He has co-authored over 250 publications, has won several best paper awards, and has coordinated multiple large research projects on the national and European level.

Katie Driggs-Campbell, University of Illinois at Urbana-Champaign (06.04.2022)

Katie Driggs-Campbell gives a talk on “Robust Navigation for Agricultural Robots via Anomaly Detection” as part of the PhenoRob Seminar Series.

Neural Network Basics for Image Interpretation by C. Stachniss (PILS Lecture)

Neural Network Basics for Image Interpretation by Cyrill Stachniss. The PhenoRob Interdisciplinary Lecture Series called PILS is a series of lectures of up to 30 min explaining key concepts to people from other fields. The series is part of the Cluster of Excellence PhenoRob at the University of Bonn. #UniBonn #StachnissLab #robotics #learning #neuralnetworks

Wolfram Burgard, Giorgio Grisetti, and Cyrill Stachniss: Graph-based SLAM in 20 Minutes

This is a short video lecture by Wolfram, Giorgio, and Cyrill explaining SLAM with pose graphs in 20 min. Tutorial paper: http://www.informatik.uni-freiburg.de/~stachnis/pdf/grisetti10titsmag.pdf #UniBonn #StachnissLab #slam #lecture

Talk by B. Mersch: Self-supervised Point Cloud Prediction Using 3D Spatio-temporal CNNs

B. Mersch, X. Chen, J. Behley, and C. Stachniss, “Self-supervised Point Cloud Prediction Using 3D Spatio-temporal Convolutional Networks,” in Proc. of the Conf. on Robot Learning (CoRL), 2021. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2021corl.pdf Code: https://github.com/PRBonn/point-cloud-prediction #UniBonn #StachnissLab #robotics #autonomouscars

Cyrill Stachniss | Dynamic Environments in Least Squares SLAM | Tartan SLAM Series

Presentation by Cyrill Stachniss as part of the Tartan SLAM Series. Series overviews and links can be found on our webpage: https://theairlab.org/tartanslamseries2/

Talk by X. Chen: Moving Object Segmentation in 3D LiDAR Data: A Learning Approach (IROS & RAL’21)

X. Chen, S. Li, B. Mersch, L. Wiesmann, J. Gall, J. Behley, and C. Stachniss, “Moving Object Segmentation in 3D LiDAR Data: A Learning-based Approach Exploiting Sequential Data,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 6529-6536, 2021. doi:10.1109/LRA.2021.3093567 #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by B. Mersch: Maneuver-based Trajectory Prediction for Self-driving Cars Using … (IROS’21)

B. Mersch, T. Höllen, K. Zhao, C. Stachniss, and R. Roscher, “Maneuver-based Trajectory Prediction for Self-driving Cars Using Spatio-temporal Convolutional Networks,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2021iros.pdf #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

RSS’21: Inferring Objectives in Continuous Dynamic Games from Noise-Corrupted … by Peters et al.

Short Talk (4 min) about the work: L. Peters, D. Fridovich-Keil, V. Rubies-Royo, C. J. Tomlin, and C. Stachniss, “Inferring Objectives in Continuous Dynamic Games from Noise-Corrupted Partial State Observations,” in Proceedings of Robotics: Science and Systems (RSS), 2021. Code: https://github.com/PRBonn/PartiallyObservedInverseGames.jl Paper: https://arxiv.org/pdf/2106.03611 #UniBonn #StachnissLab #robotics #talk

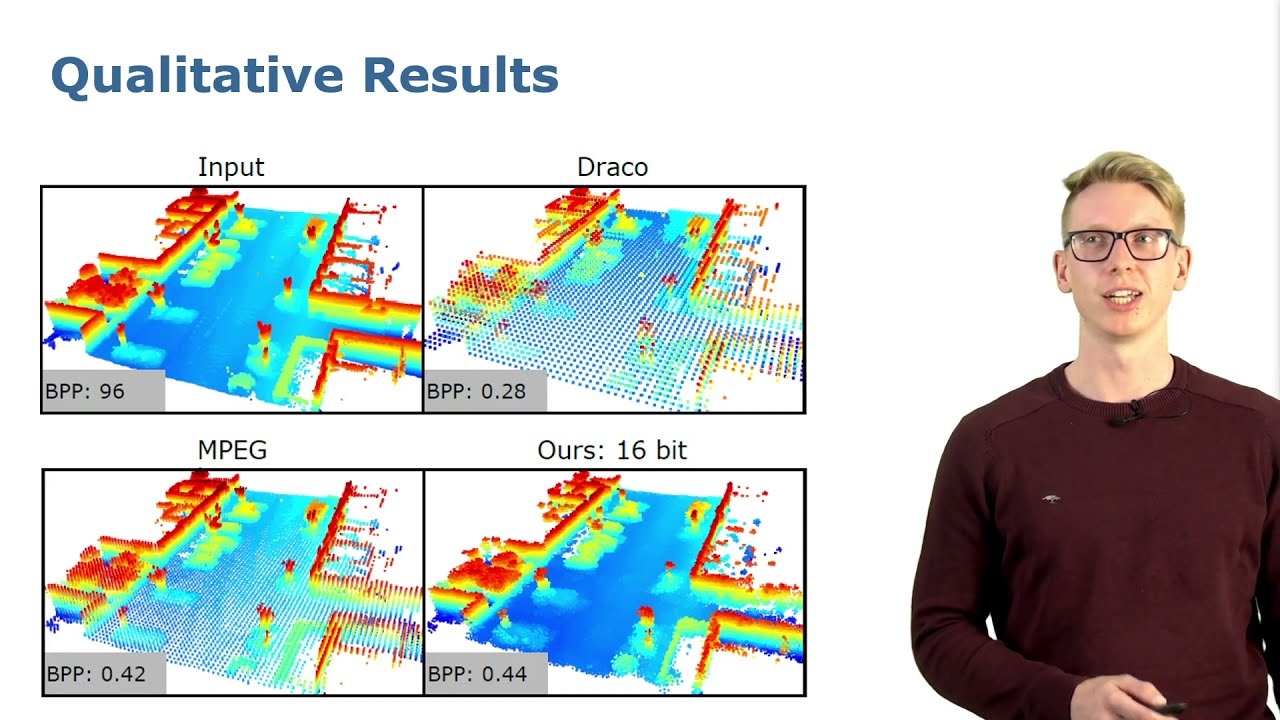

Talk by L. Wiesmann: Deep Compression for Dense Point Cloud Maps (RAL-ICRA 2021)

L. Wiesmann, A. Milioto, X. Chen, C. Stachniss, and J. Behley, “Deep Compression for Dense Point Cloud Maps,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2060-2067, 2021. doi:10.1109/LRA.2021.3059633 Code: https://github.com/PRBonn/deep-point-map-compression Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2021ral.pdf #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by F. Magistri: Phenotyping Exploiting Differentiable Rendering with Self-Consistency (ICRA’21)

F. Magistri, N. Chebrolu, J. Behley, and C. Stachniss, “Towards In-Field Phenotyping Exploiting Differentiable Rendering with Self-Consistency Loss,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2021icra.pdf #UniBonn #StachnissLab #robotics #PhenoRob #neuralnetworks #talk

Talk by I. Vizzo: Poisson Surface Reconstruction for LiDAR Odometry and Mapping (ICRA’21)

I. Vizzo, X. Chen, N. Chebrolu, J. Behley, and C. Stachniss, “Poisson Surface Reconstruction for LiDAR Odometry and Mapping,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2021icra.pdf Code: https://github.com/PRBonn/puma #UniBonn #StachnissLab #robotics #autonomouscars #slam #talk

Talk by J. Weyler: Joint Plant Instance Detection and Leaf Count Estimation … (RAL+ICRA’21)

J. Weyler, A. Milioto, T. Falck, J. Behley, and C. Stachniss, “Joint Plant Instance Detection and Leaf Count Estimation for In-Field Plant Phenotyping,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 3599-3606, 2021. doi:10.1109/LRA.2021.3060712 #UniBonn #StachnissLab #robotics #PhenoRob #neuralnetworks #talk

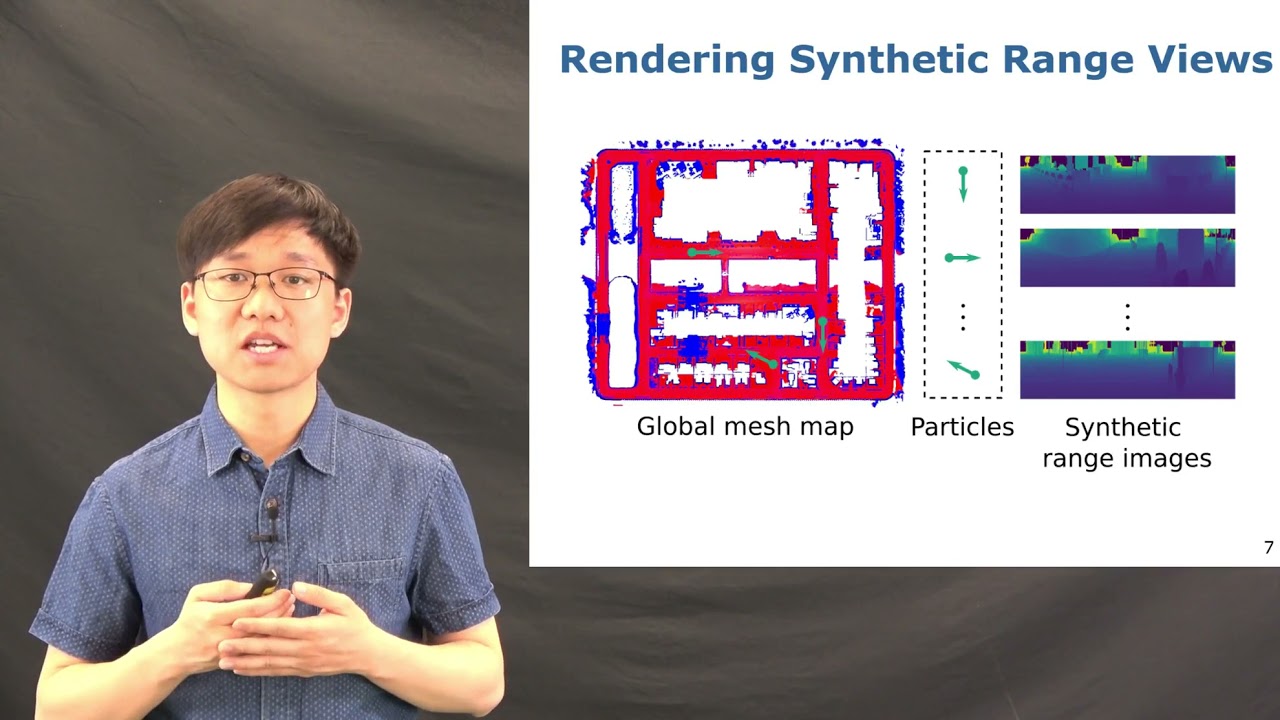

Talk by X. Chen: Range Image-based LiDAR Localization for Autonomous Vehicles (ICRA’21)

X. Chen, I. Vizzo, T. Läbe, J. Behley, and C. Stachniss, “Range Image-based LiDAR Localization for Autonomous Vehicles,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2021icra.pdf Code: https://github.com/PRBonn/range-mcl #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by A. Reinke: Simple But Effective Redundant Odometry for Autonomous Vehicles (ICRA’21)

A. Reinke, X. Chen, and C. Stachniss, “Simple But Effective Redundant Odometry for Autonomous Vehicles,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/reinke2021icra.pdf Code: https://github.com/PRBonn/MutiverseOdometry #UniBonn #StachnissLab #robotics #autonomouscars #talk

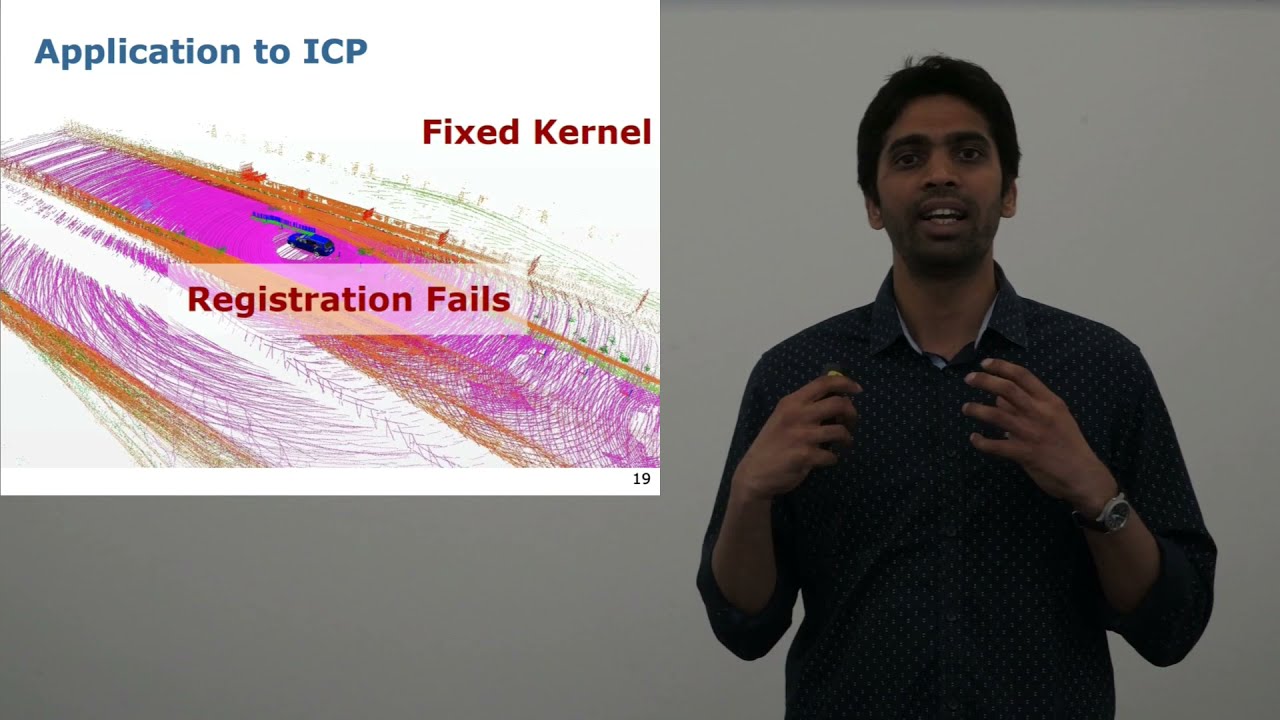

Talk by N. Chebrolu: Adaptive Robust Kernels for Non-Linear Least Squares Problems (RAL+ICRA’21)

N. Chebrolu, T. Läbe, O. Vysotska, J. Behley, and C. Stachniss, “Adaptive Robust Kernels for Non-Linear Least Squares Problems,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2240-2247, 2021. doi:10.1109/LRA.2021.3061331 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chebrolu2021ral.pdf #UniBonn #StachnissLab #robotics #autonomouscars #slam #talk

What is the SIFT Algorithm? | CLICK 3D EP. 17 | ft. Cyrill Stachniss

Do you know what the SIFT algorithm is? The scale-invariant feature transform (SIFT) is a feature detection algorithm in computer vision that detects and describes local features in images. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife, and match moving. Eugene Liscio from ai2-3D talks with Cyrill Stachniss about the SIFT algorithm. #forensicscience #forensics #forensicfiles #forensic #photogrammetry #3dmodeling #vr #crimesceneinvestigator #csi #laserscanner #laserscanning

Keynote Talk by C. Stachniss on LiDAR-based SLAM using Geometry and Semantics … (ITSC’20 SLAM-WS)

Keynote talk by C. Stachniss on “LiDAR-based SLAM using Geometry and Semantics for Self-driving Cars,” given at the SLAM Workshop of the IEEE International Conference on Intelligent Transportation Systems, 2020.

Talk by F. Magistri: Segmentation-Based 4D Registration of Plants Point Clouds (IROS’20)

Paper: F. Magistri, N. Chebrolu, and C. Stachniss, “Segmentation-Based 4D Registration of Plants Point Clouds for Phenotyping,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2020iros.pdf

Talk by D. Gogoll: Unsupervised Domain Adaptation for Transferring Plant Classification … (IROS’20)

D. Gogoll, P. Lottes, J. Weyler, N. Petrinic, and C. Stachniss, “Unsupervised Domain Adaptation for Transferring Plant Classification Systems to New Field Environments, Crops, and Robots,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/gogoll2020iros.pdf

Talk by J. Behley on Domain Transfer for Semantic Segmentation of LiDAR Data using DNNs… (IROS’20)

F. Langer, A. Milioto, A. Haag, J. Behley, and C. Stachniss, “Domain Transfer for Semantic Segmentation of LiDAR Data using Deep Neural Networks,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/langer2020iros.pdf

Talk by X. Chen on Learning an Overlap-based Observation Model for 3D LiDAR Localization (IROS’20)

X. Chen, T. Läbe, L. Nardi, J. Behley, and C. Stachniss, “Learning an Overlap-based Observation Model for 3D LiDAR Localization,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2020iros.pdf CODE: https://github.com/PRBonn/overlap_localization

Keynote Talk by C. Stachniss: Map-based Localization for Autonomous Driving (ECCV 2020 Workshops)

Invited talk by Cyrill Stachniss at the ECCV 2020 Workshop on Map-based Localization for Autonomous Driving: https://sites.google.com/view/mlad-eccv2020

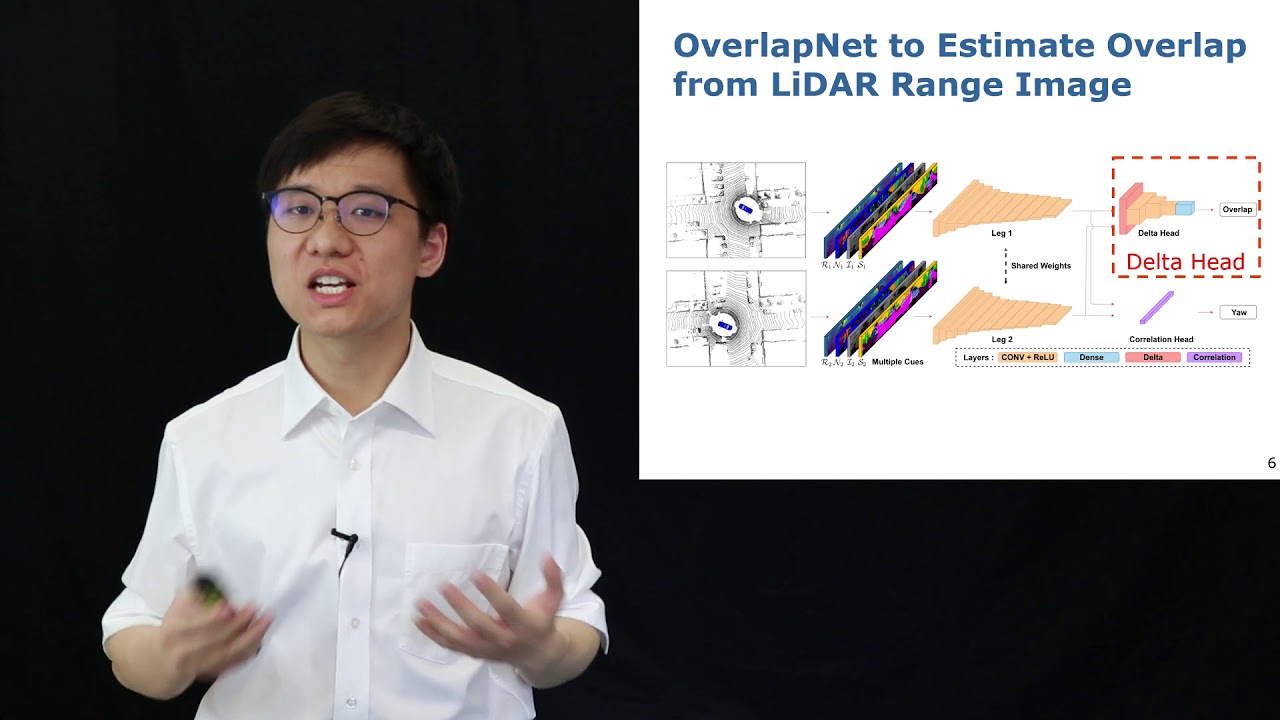

Talk by X. Chen on OverlapNet – Loop Closing for LiDAR-based SLAM (RSS’20)

Talk for the RSS 2020 paper: X. Chen, T. Läbe, A. Milioto, T. Röhling, O. Vysotska, A. Haag, J. Behley, and C. Stachniss, “OverlapNet: Loop Closing for LiDAR-based SLAM,” in Proceedings of Robotics: Science and Systems (RSS), 2020. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2020rss.pdf Code available: https://github.com/PRBonn/OverlapNet

Keynote Talk by C. Stachniss: Robots in the Field – Towards Sustainable Crop Production (ICRA’20)

Robots in the Fields: Directions Towards Sustainable Crop Production. ICRA 2020 keynote talk by Cyrill Stachniss, International Conference on Robotics and Automation, Paris, 2020.

Abstract: Food, feed, fiber, and fuel: Crop farming plays an essential role for the future of humanity and our planet. We rely heavily on agricultural production, and at the same time we need to reduce its footprint: less input of chemicals such as herbicides and fertilizer, and of other limited resources. Simultaneously, the decline in arable land and climate change pose additional constraints such as drought, heat, and other extreme weather events. Robots and other new technologies offer promising directions to tackle different management challenges in agricultural fields. To achieve this, autonomous field robots need the ability to perceive and model their environment, to predict possible future developments, and to make appropriate decisions in complex and changing situations. This talk will showcase recent developments towards robot-driven sustainable crop production. We will illustrate how certain management tasks can be automated using UAVs and UGVs and which new possibilities this technology offers. In addition to work conducted in collaborative European projects, the talk covers ongoing developments of the Cluster of Excellence “PhenoRob – Robotics and Phenotyping for Sustainable Crop Production” and some of our current exploitation activities.

Talk by N. Chebrolu on Spatio-Temporal Non-Rigid Registration of 3D Point Clouds of Plants (ICRA’20)

ICRA 2020 talk about the paper: N. Chebrolu, T. Läbe, and C. Stachniss, “Spatio-Temporal Non-Rigid Registration of 3D Point Clouds of Plants,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chebrolu2020icra.pdf

Talk by L. Nardi on Long-Term Robot Navigation in Indoor Environments… (ICRA’20)

ICRA 2020 talk about the paper: L. Nardi and C. Stachniss, “Long-Term Robot Navigation in Indoor Environments Estimating Patterns in Traversability Changes,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/nardi2020icra.pdf Discussion Slack Channel: https://icra20.slack.com/app_redirect?channel=moa08_1

Talk by J. Quenzel on Beyond Photometric Consistency: Gradient-based Dissimilarity for VO (ICRA’20)

ICRA 2020 talk about the paper: J. Quenzel, R. A. Rosu, T. Läbe, C. Stachniss, and S. Behnke, “Beyond Photometric Consistency: Gradient-based Dissimilarity for Improving Visual Odometry and Stereo Matching,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2020. PDF: http://www.ipb.uni-bonn.de/pdfs/quenzel2020icra.pdf

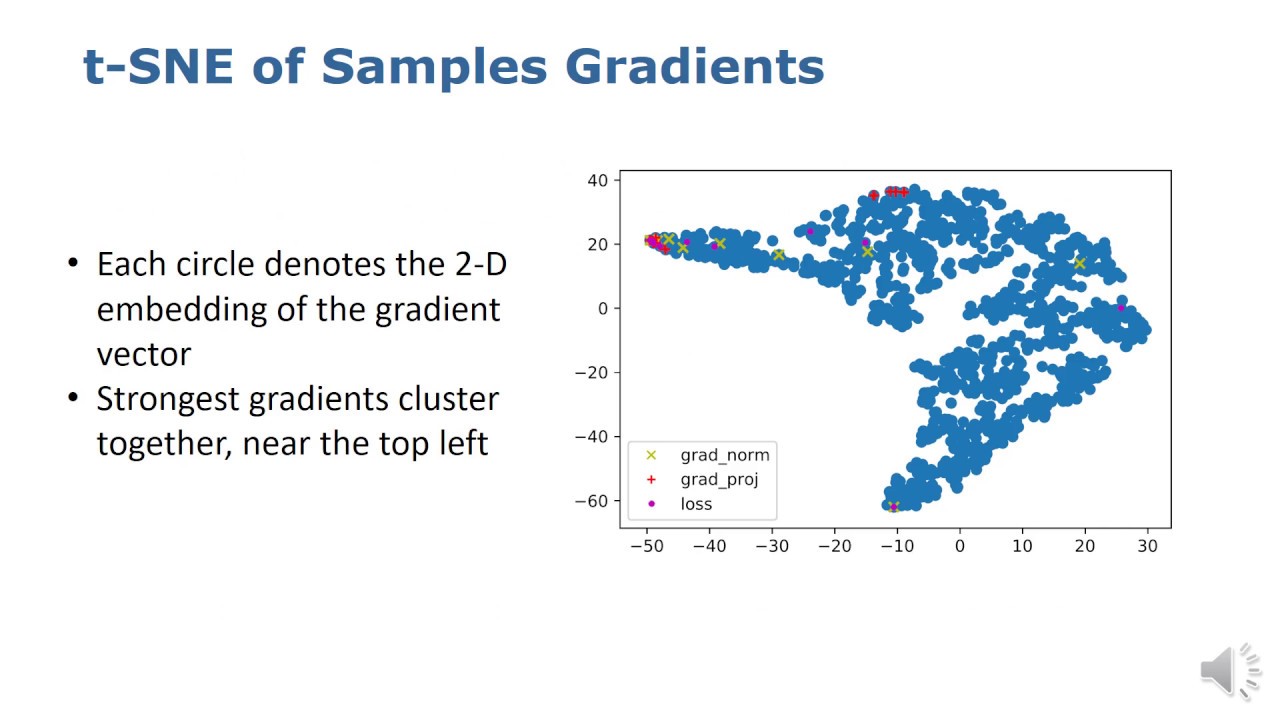

Talk by R. Sheikh on Gradient and Log-based Active Learning for Semantic Segmentation… (ICRA’20)

ICRA 2020 talk about the paper: R. Sheikh, A. Milioto, P. Lottes, C. Stachniss, M. Bennewitz, and T. Schultz, “Gradient and Log-based Active Learning for Semantic Segmentation of Crop and Weed for Agricultural Robots,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sheikh2020icra.pdf

Talk by A. Ahmadi on Visual Servoing-based Navigation for Monitoring Row-Crop Fields (ICRA’20)

ICRA 2020 talk about the paper: A. Ahmadi, L. Nardi, N. Chebrolu, and C. Stachniss, “Visual Servoing-based Navigation for Monitoring Row-Crop Fields,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/ahmadi2020icra.pdf CODE: https://github.com/PRBonn/visual-crop-row-navigation

Achim Walter – Digitalization and Sustainability: The Role of Technology (DIGICROP 2020 Keynote)

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Nived Chebrolu – Spatio-temporal registration of plant point clouds for phenotyping (Talk)

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Jan Weyler – Joint Plant Instance Detection and Leaf Count Estimation for In-Field Plant Phenotyping

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

D. Gogoll – Unsupervised Domain Adaptation for Transferring Plant Classification Systems

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/